What do you imagine when you hear the phrase “Artificial Intelligence” or “AI”? Is it flying cars, suspiciously all-knowing Google searches or creepy-looking humanoid robots?

Whatever your answer is, you are most probably right (or will be in the near future). But I am pretty sure there is one thing you didn’t even think about: a soulless machine replacing your psychologist.

One might think that the author has gone crazy (or is being held hostage by the robot Sophia), but this scenario is closer to reality than you might imagine. AI-based machines can distinguish emotional states, assess a mental state from posture and even diagnose depression based on speech and facial expression. So who is crazy now?

AI: a bright reality and a promising future

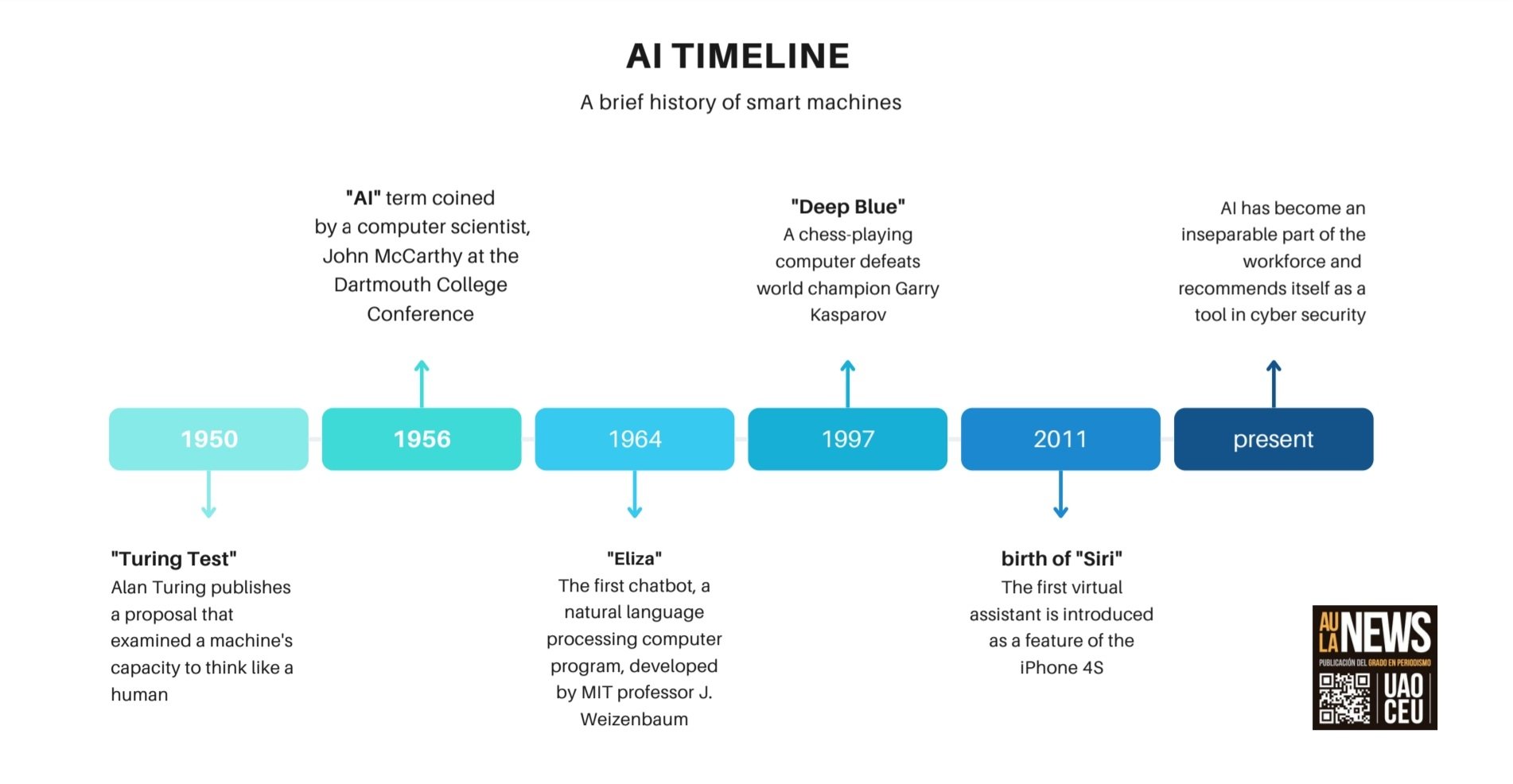

In the span of a century, we leaped from mentioning AI in sci-fi books to using it on a daily basis.

Artificial Intelligence has become an irreplaceable tool in a variety of fields, from education to healthcare. A computer is now capable of matching a patient with the right doctor, giving a diagnosis and even making a prognosis. Such a deep understanding of complex problems is possible thanks to immense databases of digitized healthcare data in all formats, from images to handwritten clinical notes.

However, the most fascinating achievements in AI application are described in a review written by specialists in the field of engineering and technology. Its main message is to show what unbelievable results might be achieved if the work on simulating human cognition continues. The review highlights three directions for AI learning human cognition: face attraction, affective computing and music emotion. Let’s take a closer look at some of them.

Reading from the face

You may not believe in love at first sight, or you may dismiss physiognomy as nonsense, but you cannot deny that you form a first impression of a person within a split second. Style, body language and facial expression tell us about a person’s characteristics, while gender, age and race hint at their background.

Moreover, according to the 55/38/7 formula proposed by the body language researcher Albert Mehrabian, communication is 55% nonverbal, 38% vocal and only 7% words. Regardless of how accurate the percentage breakdown is, nonverbal communication is unquestionably the most important part. Thus, in order to replicate a normal dialogue, robots had to come a long way. But who said it would be easy?

The AI was trained on a dataset of 5,500 faces with different facial features to assess facial beauty and to recognize gender and race. Even though the concept of an attractive face is quite controversial and, in terms of machine learning, difficult to establish, the AI could reflect our understanding of aesthetic standards. The results showed that computers are capable of reading faces and gathering information about emotions and feelings, showing that AI development based on cognitive psychology has potential.

Affective computing

At the end of the last century, the American scientist Herbert A. Simon stated that problem solving should incorporate the influence of emotions. And that is very true: as humans, we rely not only on rational thinking and reasoning but on our emotional responses as well. “Following the heart”, we sometimes make decisions that are absolutely illogical from a rational point of view, but it’s in our nature.

So, in order to achieve a human level of intelligence, computers should have emotional responses, proposed Marvin Minsky, a professor at the Massachusetts Institute of Technology. And in 1995 the concept of affective computing was introduced by Picard.

As we stated before, nonverbal communication carries the largest share of information. Our brain analyses not only the spoken words but also how they are pronounced, with what rhythm and intonation.

Combining computers’ abilities to analyse an emotional state through voice and face brought fascinating results: a system for automatic depression diagnosis. Judging by the frequency and resonance peaks of the voice and by the facial expression, the system could detect a depressive state with an accuracy of 81.14%.
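To make the idea of combining voice and face a bit more tangible, here is a minimal sketch in Python. It is not the system from the review: the per-modality scores, the equal weighting, the 0.5 threshold and the four toy samples are all made-up assumptions, used only to illustrate how two signals can be fused into one decision and how an accuracy figure is computed.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    voice_score: float  # hypothetical score in [0, 1] from a speech model
    face_score: float   # hypothetical score in [0, 1] from a facial-expression model
    depressed: bool     # ground-truth label (invented for this illustration)


def fuse(voice_score: float, face_score: float, voice_weight: float = 0.5) -> float:
    """Weighted average of the two modality scores (an assumed fusion rule)."""
    return voice_weight * voice_score + (1.0 - voice_weight) * face_score


def predict(sample: Sample, threshold: float = 0.5) -> bool:
    """Flag a depressive state when the fused score reaches the threshold."""
    return fuse(sample.voice_score, sample.face_score) >= threshold


def accuracy(samples: list[Sample]) -> float:
    """Share of samples whose prediction matches the ground-truth label."""
    correct = sum(predict(s) == s.depressed for s in samples)
    return correct / len(samples)


# Toy data, invented purely for illustration.
samples = [
    Sample(0.9, 0.7, True),
    Sample(0.2, 0.3, False),
    Sample(0.6, 0.3, True),   # fused score falls below the threshold: a miss
    Sample(0.4, 0.1, False),
]

print(f"accuracy: {accuracy(samples):.2%}")
```

On this toy data three of the four predictions are right. A real system would learn the scores and the fusion from recorded speech and video rather than use hand-picked numbers.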

Another extraordinary task a machine can perform is analysing a mental state from a series of movements. Intelligent devices attached to a seat were able to check the emotional state of office staff and car drivers through their posture.

The shortcomings of AI

However, despite all the fascinating tasks AI-based programs can perform, certain downsides have been reported:

“AI often involves complex use of statistics, mathematical approaches and high-dimensional data that could lead to bias, inaccurate interpretation of results and over-optimism of AI performance,” says one of the specialists working on WHO research, sharing the concern.

On top of that, the lack of transparency about information and data usage remains a problem, making a lot of people treat AI with caution and even distrust.

All in all, Artificial Intelligence has become an irreplaceable tool. Whether it can compete with human specialists in managing our mortal lives, however, we will see very soon :)